Dataset integrity matters for the records tied to the identifiers 8664636743, 604845536, 6956870780, 619084194, 8888970097, and 990875788. Accurate, consistent data is a precondition for reliable outcomes, while compromised data exposes an organization to breaches and compliance failures. Robust validation and quality assurance practices reduce those risks. The sections below cover what integrity means, what makes data accurate, what can go wrong, and how to improve reliability.

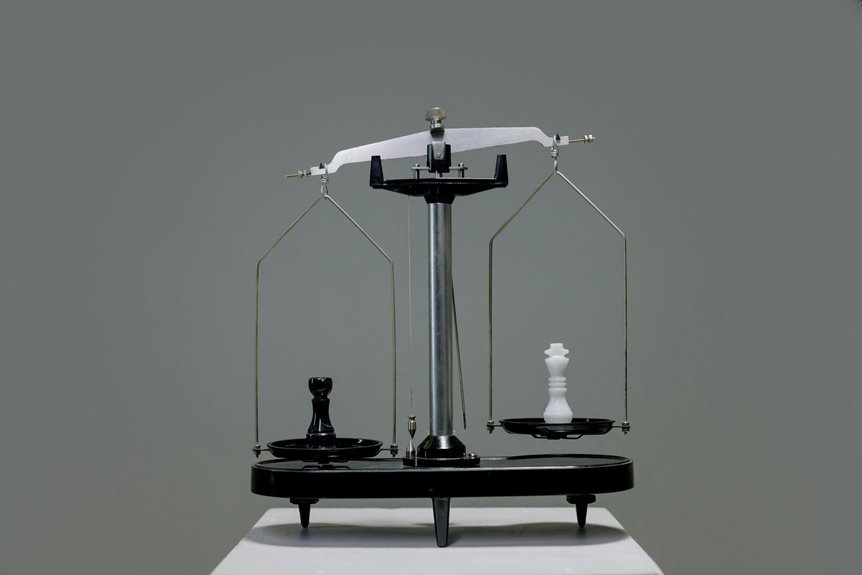

Understanding Dataset Integrity

Dataset integrity encompasses the accuracy, consistency, and reliability of data throughout its lifecycle.

Achieving this integrity requires rigorous data validation processes and comprehensive quality assurance measures. By ensuring that data is verified and meets predefined standards, organizations can maintain trustworthiness in their datasets.

Ultimately, a commitment to dataset integrity lets users make informed decisions based on reliable information.

Key Components of Data Accuracy

Accurate data serves as the foundation for sound decision-making and effective analysis.

Two components matter most: validating the data through systematic checks, and assessing the credibility of its sources. Data that passes those checks and comes from reputable sources is more reliable.
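As a rough illustration of a systematic check, the sketch below validates the identifiers quoted in the introduction. The rules it applies (ASCII digits only, 9 to 10 characters, no duplicates) are assumptions inferred from how those six values look, not a documented specification, and `validate_identifiers` is a hypothetical helper name.

```python
from collections import Counter

# Identifiers quoted in the introduction of this article.
IDENTIFIERS = [
    "8664636743", "604845536", "6956870780",
    "619084194", "8888970097", "990875788",
]


def validate_identifiers(values, min_len=9, max_len=10):
    """Return a dict mapping each failing value to a list of problems.

    The length bounds are illustrative assumptions, not a documented rule.
    """
    counts = Counter(values)
    problems = {}
    for value in values:
        issues = []
        # Accept ASCII digits only; str.isdigit alone also allows other scripts.
        if not (value.isascii() and value.isdigit()):
            issues.append("non-digit characters")
        if not (min_len <= len(value) <= max_len):
            issues.append("unexpected length")
        if counts[value] > 1:
            issues.append("duplicate")
        if issues:
            problems[value] = issues
    return problems


if __name__ == "__main__":
    # An empty dict means every identifier passed all three checks.
    print(validate_identifiers(IDENTIFIERS))
```

Returning the full list of problems per value, rather than stopping at the first failure, makes it easier to report everything that needs fixing in one pass.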

Checking both validity and provenance gives stakeholders grounds to trust the data they base decisions on.

Risks of Compromised Datasets

Compromised datasets pose significant risks that can undermine the integrity of decision-making processes.

Data breaches erode trust and expose organizations to security vulnerabilities and compliance issues.

Mishandling sensitive information also raises ethical concerns and can compound operational disruptions, which makes effective governance harder.

Addressing these risks is crucial to maintaining data integrity and upholding stakeholder confidence in organizational practices.

Strategies for Enhancing Data Reliability

Enhancing data reliability requires a multifaceted approach that integrates robust methodologies and best practices.

Implementing data validation techniques catches errors at the entry stage, while continuous monitoring helps identify anomalies that appear later.
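One way to picture the monitoring half is a simple outlier check on a metric tracked over time. The sketch below is a minimal example, assuming the monitored metric is a daily row count (a hypothetical choice) and using the modified z-score based on the median absolute deviation. The threshold of 3.5 is a common rule of thumb rather than a requirement, and `flag_anomalies` is an illustrative name.

```python
from statistics import median


def flag_anomalies(values, threshold=3.5):
    """Return the indices of values whose modified z-score exceeds threshold.

    The score is based on the median absolute deviation (MAD), which is
    less sensitive to outliers than the mean and standard deviation.
    """
    if not values:
        return []
    med = median(values)
    mad = median([abs(v - med) for v in values])
    if mad == 0:
        # More than half the values equal the median, so the score is
        # undefined; flag anything that differs from the median instead.
        return [i for i, v in enumerate(values) if v != med]
    return [
        i for i, v in enumerate(values)
        if 0.6745 * abs(v - med) / mad > threshold
    ]


if __name__ == "__main__":
    # Hypothetical daily row counts; the fifth load is clearly short.
    daily_row_counts = [1000, 1012, 998, 1005, 40, 1003, 995]
    print(flag_anomalies(daily_row_counts))
```

A median-based score is used here because a single bad load would otherwise inflate the mean and standard deviation and partly hide itself.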

Furthermore, establishing quality assurance measures promotes consistency across datasets, fostering confidence in the data’s integrity.
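A consistency check across copies of a dataset can be as simple as comparing fingerprints. The sketch below is one possible approach, not a prescribed method: it hashes a canonical JSON form of each row with SHA-256 so that two copies with the same content produce the same digest regardless of row or key order. The `status` field and the helper name `dataset_fingerprint` are hypothetical.

```python
import hashlib
import json


def dataset_fingerprint(rows):
    """Return a SHA-256 hex digest that ignores row order and key order."""
    # Serialize each row with sorted keys, then sort the rows themselves,
    # so that logically equal datasets yield identical byte streams.
    canonical = sorted(json.dumps(row, sort_keys=True) for row in rows)
    digest = hashlib.sha256()
    for line in canonical:
        digest.update(line.encode("utf-8"))
        digest.update(b"\n")
    return digest.hexdigest()


if __name__ == "__main__":
    primary = [
        {"id": "604845536", "status": "active"},
        {"id": "619084194", "status": "closed"},
    ]
    # Same content, different row and key order.
    replica = [
        {"status": "closed", "id": "619084194"},
        {"id": "604845536", "status": "active"},
    ]
    print(dataset_fingerprint(primary) == dataset_fingerprint(replica))
```

Ignoring order is a design choice that suits unordered record sets; if row order carries meaning, drop the outer `sorted` call so that reordering changes the fingerprint.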

Collectively, these strategies empower organizations to maintain high standards of reliability in their data management practices.

Conclusion

Ensuring dataset integrity is an ongoing commitment rather than a one-off task. By prioritizing data accuracy, understanding the associated risks, and implementing robust validation strategies, organizations can make their datasets considerably more reliable. That commitment strengthens stakeholder confidence, supports informed decision-making, and protects the organization’s reputation in an increasingly data-driven world.